Janik von Rotz


5 min read

Working with LLM agents

In the last few days I tinkered with Cortecs and OpenCode. It seems LLM agents are here to stay and I need to deal with them. I admit that I am very skeptical of the AI hype. However, I see a lot of benefits when working with LLM agents in a controlled environment.

People have built great tools and practices. There are many providers that host LLMs or route between multiple providers. The ecosystem has matured, and this led me to explore more and put in more effort to understand what is going on.

The goal of this exploration was to find a new coding workflow that includes an LLM agent and fits well into my existing workflow.

As the good engineer that I am, I first wrote down some requirements for the workflow.

Requirements

No unnecessary features

The workflow should not encourage me to build more stuff, but to fix issues and improve features.

Code is technical debt and the theory of code is cognitive debt. Building a lot of features and then not using them produces waste and noise.

No digressions

In my experience, if the agent does not satisfy the requirements, you start explaining the issue over and over again. You often end up in an argument, and the LLM always promises to do better.

This kind of arguing and guessing can be a huge waste of time and defeats the very reason for using an agent. Instead of having an ongoing conversation and a bloated context, it is often easier to start over.

And thus the workflow should encourage me to abort and refine my initial prompt.

Spec-driven prompts

From experience, it is worth writing an elaborate prompt. The initial prompt is a specification of the new feature or the bug to resolve.

Before starting an agent session, a well-defined prompt must already exist.

Quick bootstrap

I have many software projects with different layouts and frameworks. Before letting an agent go rogue in the repo, I want to provide clear guidelines.

The workflow should make it easy to provide the necessary context for very different projects.

Task file integration

This is the most important requirement. I manage all my software projects with taskfile.build. It is a standard for a bash script and also a library for commands. It allows me to manage and bootstrap very different software projects.

Whatever workflow I am going to build, it must be callable from a task file.
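To illustrate what "callable from a task file" means, here is a minimal sketch of a bash task file: one function per command plus a dispatcher that runs the function named by the first argument. This is an assumption about the general pattern, not the actual taskfile.build template; `run_task` and `hello` are hypothetical names.

```bash
#!/bin/bash
# Minimal sketch of a bash task file (hypothetical, not the taskfile.build template):
# every command is a function, and run_task dispatches to it by name.

help() {
  echo "Usage: task <command> [args]"
  echo "Commands: help, hello"
}

# Example command; a real task file would hold commands like init-project here
hello() {
  echo "Hello, ${1:-world}!"
}

run_task() {
  local command="${1:-help}"
  # Drop the command name so the function only sees its own arguments
  if [ "$#" -gt 0 ]; then shift; fi
  # Only dispatch to functions, never to arbitrary programs on the PATH
  if declare -F "$command" > /dev/null; then
    "$command" "$@"
  else
    echo "Unknown command: $command" >&2
    help >&2
    return 1
  fi
}
```

In an executable `task` script, the last line would call `run_task "$@"`, so that `task hello Janik` runs the `hello` function with `Janik` as its argument.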

Workflow

Now let me show you what the coding workflow looks like.

After iterating on many ideas, practices, scripts and commands, I ended up with a three-step workflow:

- Setup: Prepare the project to run an LLM agent

- Run: Create and run a prompt

- Finish: Summarize and commit the changes

The commands I use in my workflow are imported from https://taskfile.build/library/#llm.

Setup

First, I enter a new or existing project and run the init project command:

task init-project

This command creates several files and folders. The relevant files are:

- `AGENTS.md`: Templated guideline for the LLM agent

- `README.md`: Guide for the user and the LLM agent

- `prompts`: Folder that contains spec-driven prompts

- `task`: Templated project-level task file

I update these files according to the context of the project.

Run

I create a new prompt file with this command:

task create-prompt "Add prompt commands"

This creates the file `prompts/08_add-prompt-commands.md` and opens it in the default editor. Then I update the Task section with the specification.
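The file name combines a running two-digit number and a slug of the title. A minimal sketch of that naming logic could look like this; `slugify`, `next_prompt_number` and `prompt_path` are hypothetical helpers, not the actual taskfile.build implementation, and non-ASCII letters in the title simply become dashes.

```bash
#!/bin/bash
# Sketch of the naming logic for files like prompts/08_add-prompt-commands.md
# (hypothetical helpers, not the taskfile.build implementation).

# Turn "Add prompt commands" into "add-prompt-commands"
slugify() {
  printf '%s' "$1" | tr '[:upper:]' '[:lower:]' \
    | sed -e 's/[^a-z0-9]\{1,\}/-/g' -e 's/^-//' -e 's/-$//'
}

# Print the next two-digit prompt number; the sequence starts at 01
next_prompt_number() {
  local dir="${1:-prompts}"
  local last=0 file num
  for file in "$dir"/[0-9][0-9]_*.md; do
    [ -e "$file" ] || continue          # the glob matched nothing
    num=$(basename "$file")
    num=${num%%_*}
    # Force base 10 so that "08" and "09" are not parsed as octal
    if (( 10#$num > last )); then
      last=$((10#$num))
    fi
  done
  printf '%02d\n' $((last + 1))
}

# Build the path of a new prompt file from its title
prompt_path() {
  local dir="${2:-prompts}"
  printf '%s/%s_%s.md\n' "$dir" "$(next_prompt_number "$dir")" "$(slugify "$1")"
}
```

A `create-prompt` command could then copy its template to `$(prompt_path "Add prompt commands")` and open the result with `"$EDITOR"`.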

Here is an example of what the prompt file might look like before being executed:

````markdown
---
title: Add prompt commands
---

# Run 08

Replace the `==` marked instructions in this file while you work on the task.

## Context

Read the `AGENTS.md` and `README.md` to get an understanding of the project.

## Task

Add two new commands `create-prompt` and `list-prompt` to the `bin` folder.

The first command asks for a title. The user is expected to enter something like `Title of the prompt`.

Then the command creates a file `prompts/NN_title-of-the-prompt.md` from a template `prompt.md.template`. It writes the title of the prompt into the frontmatter.

Once the file is created it uses the `$EDITOR` to open the file.

The number sequence starts at 01 and continues upwards.

Similar to `list-dotenv`, the `list-prompt` command lists all prompts. It shows a simple table:

```markdown
| ID | title |
| --- | ------------------- |
| 08 | Add prompt commands |
```

The title is retrieved from the frontmatter. The table width should be dynamic.

Add the new commands to the `library.md` in the LLM section. Update the `task.template` with `task update-template`.

## Worklog

==Fill this in as you work on the task==

## Summary

==Fill this in once you have completed the task==
````
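For comparison, the `list-prompt` behaviour specified in this prompt could be sketched by hand as follows. It assumes files named `NN_slug.md` whose frontmatter starts on the first line and contains a plain `title: ...` line; `prompt_title` and `list_prompts` are hypothetical names, and the column padding assumes ASCII titles.

```bash
#!/bin/bash
# Sketch of a list-prompt style command (hypothetical, not the agent's output):
# print ID and frontmatter title of every prompt as a Markdown table whose
# title column is as wide as the longest title.

# Print the value of the first "title:" line inside the leading --- block
prompt_title() {
  awk '
    NR == 1 && $0 == "---" { in_fm = 1; next }
    in_fm && $0 == "---"   { exit }
    in_fm && /^title:/     { sub(/^title:[ \t]*/, ""); print; exit }
  ' "$1"
}

list_prompts() {
  local dir="${1:-prompts}"
  local file name title width=5        # at least as wide as the "title" header
  local -a ids=() titles=()
  for file in "$dir"/[0-9][0-9]_*.md; do
    [ -e "$file" ] || continue
    name=$(basename "$file")
    title=$(prompt_title "$file")
    ids+=("${name%%_*}")
    titles+=("$title")
    if (( ${#title} > width )); then width=${#title}; fi
  done

  # Header, separator sized to the widest title, then one row per prompt
  local dashes i
  dashes=$(printf '%*s' "$width" '' | tr ' ' '-')
  printf '| ID | %-*s |\n' "$width" "title"
  printf '| -- | %s |\n' "$dashes"
  for i in "${!ids[@]}"; do
    printf '| %s | %-*s |\n' "${ids[$i]}" "$width" "${titles[$i]}"
  done
}
```

With only the example prompt in `prompts/`, `list_prompts` prints a three-line table whose last row is `| 08 | Add prompt commands |`.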

To execute the prompt, I launch `opencode` in the root of the project and simply copy-paste the path to the prompt file. OpenCode then starts working on the task.

If it does not follow the instructions, I either create a new session or give some manual inputs.

Finish

After a few seconds the task has been completed. The Worklog and Summary sections should have been filled in by the agent.

It is crucial not to blindly trust the completeness of your spec or the output of the LLM. So I check the `git diff`. If something seems off, I either update the prompt or make manual changes.

If I am happy with the result, I stage the changes with `gaa` (a common shell alias for `git add --all`) and run `task update-changelog-with-llm`. This updates the `CHANGELOG.md` according to the Keep a Changelog specification.

To commit the changes, I use `task commit-with-llm`. This command generates the commit message from the git diff according to the Conventional Commits specification.
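Since the commit message is written by the model, it can be worth checking its shape before pushing. A minimal sketch, assuming only the header format `type(optional scope)!: description` from the Conventional Commits specification matters; `is_conventional_commit` is a hypothetical helper, not part of taskfile.build, and it does not validate the body or footers.

```bash
#!/bin/bash
# Sketch: check that the first line of a commit message has the
# Conventional Commits shape "type(optional scope)!: description".
is_conventional_commit() {
  local message="$1"
  # Keep only the header, i.e. everything before the first newline
  local header="${message%%$'\n'*}"
  # type, optional (scope), optional "!" for breaking changes, ": ", description
  local pattern='^[[:alpha:]]+(\([^()]+\))?!?: .+'
  [[ "$header" =~ $pattern ]]
}
```

For example, `is_conventional_commit "$(git log -1 --format=%B)" || echo "Last commit is not a Conventional Commit"` checks the most recent commit.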

And that’s it!

Afterthoughts

This workflow works well for trivial and well-defined tasks. Once you try more complex tasks or multiple agents, the quality of the project erodes pretty fast.

Some researchers have demonstrated that LLMs can create entire browsers within a week. Browser engines are well specified, but their creation takes a very long time. The result met all requirements, but was very slow and inefficient.

I believe that the AI hype is a challenge for the entire software industry. It’s not just salespeople who are hyping AI, but also engineers who should know better.

Until the bubble pops, I’ll try my best.

Categories: Large Language Model

Tags: llm, augmented, artifical, intelligence


3 min read

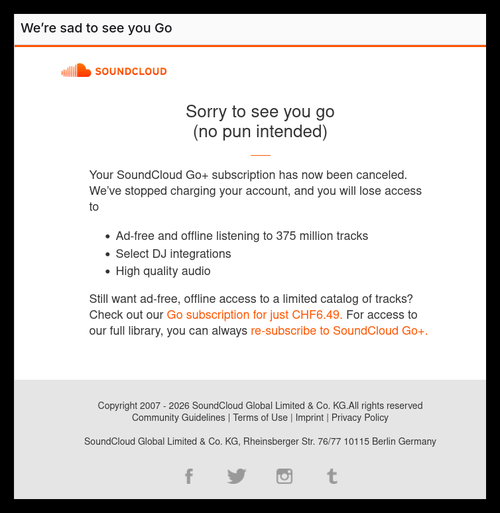

Goodbye Soundcloud, Hello Bandcamp

This post is about enshittification and the decay of the creative web as we know it. It is also about owning and paying your fair share to music artists. I try to recall my last 20 years of listening to music and give some advice for the future.

2007 - Success of .mp3

Setup

Back in 2007 I had a Windows 7 computer and my music library was stored on it. The library was synced with iTunes, Zune Player, SkyDrive (now OneDrive) or the PlayStation Portable (PSP).

Labor

I put in a lot of effort to keep the library well maintained. I updated metadata and added album covers.

Industry

Music was published by record labels. Artists depended on the record label and thus record labels had a lot of power.

2013 - Subscribe to listen

Setup

Internet bandwidth increased and music streaming became feasible. In 2013 Soundcloud was an underdog for listening to music. A lot of listeners had already ditched Apple Music in favor of Spotify. On July 2nd 2013 I created my first playlist on Soundcloud.

Labor

With access to an almost infinite music library, the work to maintain my personal music library became obsolete quite quickly. Instead, I created a lot of playlists. Discovering music and seeing my taste change over time was fascinating.

Industry

Between 2010 and 2020, the entire transition from owning records to buying subscriptions took place.

2025 - Enter the enshittogenesis

Setup

What is the state of Soundcloud? Since its days as the underdog, Soundcloud has become a major player. They have tried various subscription models and rebranded themselves many times.

Labor

If I recall correctly, these playlists held 10 to 12 songs. When I check them now, only 4 to 6 songs are left. About 50% of songs have been removed from my playlists.

At least you are able to switch streaming platforms. You can sync your likes, follows and playlists between streaming providers (Spotify, Tidal, Apple Music or Soundcloud).

Industry

Spotify has changed the music industry… for the worse. They have the monopoly on music streaming and also decide how music is produced. Artists do not get their fair share.

Soundcloud has the same problem as other music streaming providers. You cannot please your shareholders with a $13 per month subscription and thus have to seek other revenue models.

2026 - Back to owning

Setup

As the title of this post already tells, I cancelled my Soundcloud subscription.

What really tipped me off was the fact that, even though I was a paying customer, Soundcloud tried to milk me for advertising. The Soundcloud app had become the most intrusive app and number one on the adblock wall of shame.


Just switching to another provider was not an option. The only option left was Bandcamp.

Labor

It is kind of like going back to buying CDs and vinyl. If you are truly a fan of music and want to support artists, then buying from them directly is the best way.

Industry

Bandcamp goes half-way. It is still a platform and can still be sold and remodeled. But until then, it seems like the best option.

Also I appreciate their stance on AI: https://blog.bandcamp.com/2026/01/13/keeping-bandcamp-human/

Categories: Music

Tags: music, soundcloud, bandcamp, enshittification


3 min read

2025 Book List

In 2025 I read 9 books and 4 mangas. As we move towards a more federated and decentralized internet, I now track my reading habits with Bookwyrm. Please join the fediverse and follow me via Bookwyrm.

My favorite book was Unsong by Scott Alexander.

Here is the full 2025 book list:

Books

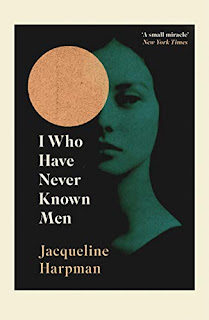

Title: I Who Have Never Known Men

Author: Jacqueline Harpman

Comment: A lost story, that became relevant. Not sure why. Reading the book makes you curious to learn more about the author.

Rating: 4/5

Link:

https://bookwyrm.social/book/416044/s/i-who-have-never-known-men

Finished: Oct. 7, 2025

Title: Die Verkrempelung der Welt

Author: Gabriel Yoran

Comment: This book does a good job of explaining enshittification to non-tech people. The language is simple. I was very impressed by references to projects that I expected to be totally irrelevant outside the tech realm.

Rating: 4/5

Link:

https://bookwyrm.social/book/1987830/s/die-verkrempelung-der-welt

Finished: Oct. 3, 2025

Title: Unsong

Author: Scott Alexander

Comment: A story like no other. Hard to explain without sounding like a schizophrenic delulu. There are fighting scenes, but instead of swords or guns, they cite the Bible and the Talmud. Everything is taken literally.

Rating: 5/5

Link:

https://bookwyrm.social/book/1793156/s/unsong

Finished: Oct. 3, 2025

Title: Dragon’s egg

Author: Robert L. Forward

Comment: Rock-solid sci-fi book. The story explores the idea that life can flourish under very different conditions to ours.

Rating: 3/5

Link:

https://bookwyrm.social/book/42702/s/dragons-egg

Finished: Aug. 9, 2025

Title: The Metamorphosis of Prime Intellect

Author: Roger Williams

Comment: Singularity puts everybody into a simulation. Death is defied, but not the human condition.

Rating: 3/5

Link:

https://bookwyrm.social/book/199543/s/the-metamorphosis-of-prime-intellect

Finished: July 12, 2025

Title: Lords of Uncreation

Series: The Final Architecture #3

Author: Adrian Tchaikovsky

Comment: The awaited and final book of the series. A space opera that delivers everything you wish for.

Rating: 4/5

Link:

https://bookwyrm.social/book/817598/s/lords-of-uncreation

Finished: April 10, 2025

Title: Reinventing organizations

Author: Frederic Laloux

Comment: Frederic fundamentally changed my understanding of how organisations are supposed to work. This book provides the answer to many problems employees face in hierarchical organisations.

Rating: 5/5

Link:

https://bookwyrm.social/book/1011193/s/reinventing-organizations

Finished: April 14, 2025

Title: A Short Stay in Hell

Author: Steven L. Peck

Comment: Don’t underestimate the amount of pages and words it takes to fuck up your imagination. This story did a very good job of triggering the fear of endlessness and dullness.

Rating: 5/5

Link:

https://bookwyrm.social/book/44622/s/a-short-stay-in-hell

Finished: July 7, 2025

Title: Survival of the Richest

Author: Douglas Rushkoff

Comment: If you already read and listen to Rushkoff, this book offers nothing new.

Rating: 3/5

Link:

https://bookwyrm.social/book/550662/s/survival-of-the-richest

Finished: July 5, 2025

Mangas

Title: Dorohedoro

Author: Q Hayashida

Comment: I love the dark atmosphere. Magic is not something beautiful. It is smoky, stinky and deadly. The story is entwined and well paced. Recommended to everyone.

Rating: 5/5

Link:

https://bookwyrm.social/series/by/294625?series_name=Dorohedoro

Finished: July 1, 2025

Title: Delicious in Dungeon

Author: Ryoko Kui

Comment: The friend who recommended this manga to me is a cook by profession. However, this manga is not only about cooking. It is a funny dungeon crawler, but has the necessary spice to blend into a well-cooked story.

Rating: 4/5

Link:

https://bookwyrm.social/series/by/742?series_name=Delicious%20in%20Dungeon

Finished: December 1, 2025

Title: The Fable

Author: Katsuhisa Minami

Comment: The archetype of professional assassins trying to live a modest life who are then, for various reasons, held accountable for their past. The Fable is a group of feared assassins, all with peculiar personalities.

Rating: 4/5

Link:

https://en.wikipedia.org/wiki/The_Fable

Finished: September 1, 2025

Title: The Fable: The Second Contact

Author: Katsuhisa Minami

Comment: The second part starts with COVID. A difficult time for manga artists and so we see some personal thoughts of the author. But then the story goes back into the well-known style of the first series.

Rating: 4/5

Link:

https://en.wikipedia.org/wiki/The_Fable

Finished: October 1, 2025

Tags: book, list


3 min read

Forgejo action to update Kubernetes deployment

In the last post I showed how you can build a Docker image in a Kubernetes cluster using a Forgejo runner. One missing step was the actual deployment of the new Docker image.

In the context of Kubernetes, to deploy means to update a deployment config and thus restart the life cycle of pods. There are many ways to do that. The simplest way is to kill a pod. If the `imagePullPolicy` of a container is set to `Always`, Kubernetes will pull the latest image before every container initialization. So whenever a pod is deleted, the Deployment creates a replacement pod, Kubernetes pulls the image, and the new pod runs the latest version.

Deploy kubeconfig

We use this approach to update a deployment. To access the deployments of the Kubernetes cluster, a new service account is required. I created another Helm Chart that provides exactly this: https://kubernetes.build/deploymentUpdater/README.html

Deploy the Chart and you'll get a new account called `deploy`. Export the kubeconfig for this account as described here:

https://kubernetes.build/deploymentUpdater/README.html#forgejo-deployment-action

Codeberg setup

Set up the kubeconfig as a secret for your organisation or personal account. If you are using Codeberg, open Settings > Actions > Secrets and click Add secret. Enter `KUBECONFIG_DEPLOY` as the name and the content of the kubeconfig as the value.

Forgejo action

We have already reached the final step. We assume that your repo has a build workflow, e.g. `.forgejo/workflows/build.yml`. Rename the file to something like `.forgejo/workflows/build-and-deploy.yml`. In addition to the build step, we add a deploy step:

```yaml
jobs:
  # build: ... (your existing build job)

  deploy:
    name: Deploy to Kubernetes
    runs-on: ubuntu-latest
    container: catthehacker/ubuntu:act-latest
    needs: build
    steps:
      - name: Checkout code
        uses: actions/checkout@v4

      - name: Install kubectl
        run: |
          curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"
          chmod +x kubectl
          sudo mv kubectl /usr/local/bin/

      - name: Create Kubeconfig for Deployment
        run: |
          mkdir -p $HOME/.kube
          echo "${{ secrets.KUBECONFIG_DEPLOY }}" > $HOME/.kube/config

      - name: Verify kubectl version
        run: kubectl version --client

      - name: Deploy to Kubernetes
        run: kubectl delete pods -l app=hugo -n <namespace>
```

The deploy step depends on the build step (`needs: build`). It installs `kubectl` and sets up the kubeconfig to access the cluster. It then deletes all pods matching the label in the given namespace. Update the label and namespace according to your configuration.
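The `echo "${{ secrets.KUBECONFIG_DEPLOY }}"` line works, but the secret is substituted into the script text and the file gets default permissions. A slightly more defensive variant of the "Create Kubeconfig for Deployment" step could be sketched as below. It assumes the step passes the secret in through an environment variable, here the hypothetical `KUBECONFIG_DATA` (e.g. via a step-level `env:` entry set to `${{ secrets.KUBECONFIG_DEPLOY }}`); `write_kubeconfig` is likewise a made-up helper name.

```bash
#!/bin/bash
# Sketch: write a kubeconfig from an environment variable with owner-only permissions.
# Assumption: KUBECONFIG_DATA is a hypothetical variable the workflow step sets
# from the KUBECONFIG_DEPLOY secret; Forgejo does not define it on its own.
write_kubeconfig() {
  local target_dir="${1:-$HOME/.kube}"
  if [ -z "${KUBECONFIG_DATA:-}" ]; then
    echo "KUBECONFIG_DATA is empty, refusing to write a kubeconfig" >&2
    return 1
  fi
  # Only the current user may enter the directory
  mkdir -p "$target_dir"
  chmod 700 "$target_dir"
  # umask 077 makes the new file 600; printf avoids echo's special handling of
  # backslashes and leading dashes
  ( umask 077 && printf '%s\n' "$KUBECONFIG_DATA" > "$target_dir/config" )
}
```

In the workflow, the `run:` block of that step would define the function and then call `write_kubeconfig` without arguments to write `$HOME/.kube/config`.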

Check out the full reference of the `build-and-deploy.yml`:

https://codeberg.org/janikvonrotz/janikvonrotz.ch/src/branch/main/.forgejo/workflows/build-and-deploy.yml

Run the action

Commit and push the workflow file. The following should happen:

- Codeberg creates a new action run

- The Forgejo runner receives the task and runs the build step

- Once the build step is completed it starts the deploy step

- It installs `kubectl` and adds the kubeconfig

- Then it outputs the version of kubectl

- Finally it deletes the pods matching the label and namespace

- Kubernetes will pull the image and deploy new pods

- Your application has been updated

Tags: kubernetes, forgejo, runner, action


3 min read

Deploy Forgejo runner to Kubernetes cluster

In my post about migrating from GitHub to Codeberg, I was not able to find a suitable alternative for GitHub Actions. With GitHub Actions I built and published Docker images. Since the migration, I have been able to find a solution.

The solution is a Forgejo runner deployed to a private Kubernetes cluster. It is connected to Codeberg and runs my actions the same way as it did on GitHub. Let me walk you through the setup.

Codeberg setup

For the deployment of the Forgejo runner, I have created a Helm Chart: https://kubernetes.build/forgejoRunner/README.html. If you are familiar with Kubernetes and Helm, the deployment is straightforward.

The Helm Chart requires an instance token to register the runner. This token can be generated and copied from Codeberg. Open https://codeberg.org and click on your profile. Then navigate to Settings > Actions > Runners and click on Create new runner. Copy the registration token.

Kubernetes deployment

Once the runner is deployed, check if it shows up on Codeberg. Open Settings > Actions > Runners and you should see your registered runner.

The Chart also creates a new cluster role called `buildx`. This role is used to access the cluster and build the Docker image in the Kubernetes environment. Export the kubeconfig for this role as described here:

https://kubernetes.build/forgejoRunner/README.html#forgejo-buildx-action

Forgejo action

The last step is the migration of the GitHub action and enabling the Codeberg repository to run actions.

Let's get started by enabling actions on the repo. Open the Settings page of your repo and click on Units > Overview. Enable the Actions option and save the settings. A new Actions tab and a corresponding settings page are now shown.

We assume that you have a GitHub action `.github/workflows/build.yml` in your repo. Rename the `.github` folder to `.forgejo`.

A few modifications of the `build.yml` workflow are required to get the same results in the Forgejo runner environment.

First, we need to define the build environment. Update the `build.yml` with this `container` key:

```yaml
...
jobs:
  build:
    ...
    container: catthehacker/ubuntu:act-latest
```

The `ubuntu:act-latest` Docker image replicates the GitHub build environment.

Next, we need to grant the Forgejo runner access to the Kubernetes environment.

```yaml
- name: Checkout code
  uses: actions/checkout@v4

- name: Create Kubeconfig for Buildx
  run: |
    mkdir -p $HOME/.kube
    echo "${{ secrets.KUBECONFIG_BUILDX }}" > $HOME/.kube/config
```

As you can see, the kubeconfig is loaded from a secret. Set up this secret in your organisation or personal account. Open Settings > Actions > Secrets and click Add secret. Enter `KUBECONFIG_BUILDX` as the name and the content of the kubeconfig you exported for the `buildx` role as the value.

We are almost done. In order to build and publish a Docker image, these steps have to be added as well:

```yaml
- name: Set up Docker Buildx
  uses: docker/setup-buildx-action@v3
  with:
    driver: kubernetes
    driver-opts: |
      namespace=codeberg

- name: Login to Docker Registry
  uses: docker/login-action@v3
  with:
    username: janikvonrotz
    password: ${{ secrets.DOCKER_PAT }}
```

The `namespace=codeberg` definition must match the namespace in which the Forgejo runner is deployed. Add the `DOCKER_PAT` (a personal access token for your Docker registry) as a secret to your account.

Check out the full reference of the workflow:

https://codeberg.org/janikvonrotz/janikvonrotz.ch/src/branch/main/.forgejo/workflows/build-and-deploy.yml

Run the action

Everything is ready to run. Once you commit and push the new `.forgejo/workflows/build.yml` file, the following should happen:

- Codeberg creates a new action run

- The Forgejo runner receives the task and starts the build container

- In the build container the repository is cloned

- The kubeconfig is created and the Docker Buildx environment is prepared

- The build container builds the Docker image in the Kubernetes environment

- If successful, the image is pushed to the Docker registry

Next steps

The image is now ready to deploy. How to trigger a Kubernetes deployment from a Forgejo action will be covered in another post.

Credits

Thanks to Tobias Brunner. He gave me the initial configs for the setup:

Forgejo Runner Helm Chart: https://git.tbrnt.ch/tobru/gitops-zurrli/src/branch/main/apps/zurrli/forgejo-runner

Build and Deploy action: https://git.tbrnt.ch/tobru/tobrublog/src/branch/main/.forgejo/workflows/build-deploy.yaml

Categories: Continuous Integration

Tags: codeberg, github, forgejo, migration, kubernetes


1 min read

Too Big to Care

When hyperscalers become a problem.

For an event of the Digital Cluster Uri, I had the opportunity to give a short presentation on digital sovereignty. The presentation focuses on the so-called hyperscalers and shows why they are a problem.

Categories: Politics, Tech

Tags: powerup, dependency, hyperscaler, problem


3 min read

AI is not abstraction

The concept of abstraction has been applied to software engineering. But it never made sense. Software is flexible. Software can be changed even after it has been put into production.

Software is flexible 🌊

The layer of abstraction in software is always moving. The definition of software abstraction has nothing in common with the definition of abstraction in the engineering of a physical product.

Software is fluid, always changing, often hard to reproduce, configurable, riddled with bugs and, most of all, it has to be made sense of. As the code base changes, you always have to update your mental model of how the software works.

We tend to write more code rather than less. We tend to make software more complex rather than simpler. We add more and more features instead of deprecating them. Everything is done under the umbrella of increasing productivity.

However, developing software does not have to be more productive - it has to be contained.

The bloat is real 🍔

Once you start engineering software with the help of AI you will experience a productivity gain. Not for the overall production of software, but for solving well defined problems in a well known domain. Problems such as bug fixing, commenting, documenting, boiler-plating and explaining are straightforward tasks for AIs.

As a developer, you get hooked on running agents in your code base. Solving multiple problems at once. Trying new strategies like git worktrees to run multiple agents at the same time.

The sense of productivity and of squashing those bugs overwhelms you. This works until you hit the ceiling. The context window, the number of tokens to burn through, the invoice, the dependency juggling, the agent getting lost: these are all hard limits that put a stop to the trip.

But what is really happening here? Let's take a step back and have a look. Actually, you are not improving the software product, you are bloating the code base! It gets bigger and bigger. There are no limits to adding new features. The computing resources are limitless. Of course this makes sense: you are not getting paid to write smart code, you are getting paid to produce new features.

So this apparent level of abstraction and productivity gain is in reality just bloat. A waste of resources.

Environmental resources 🏭

Producing software with Big Tech services now has a direct link to environmental damage. It is well documented that their data centers are not sustainable and are not using sustainable energy sources. They have become an environmental hazard.

With regard to the climate crisis, you have a moral obligation not to use their data centers.

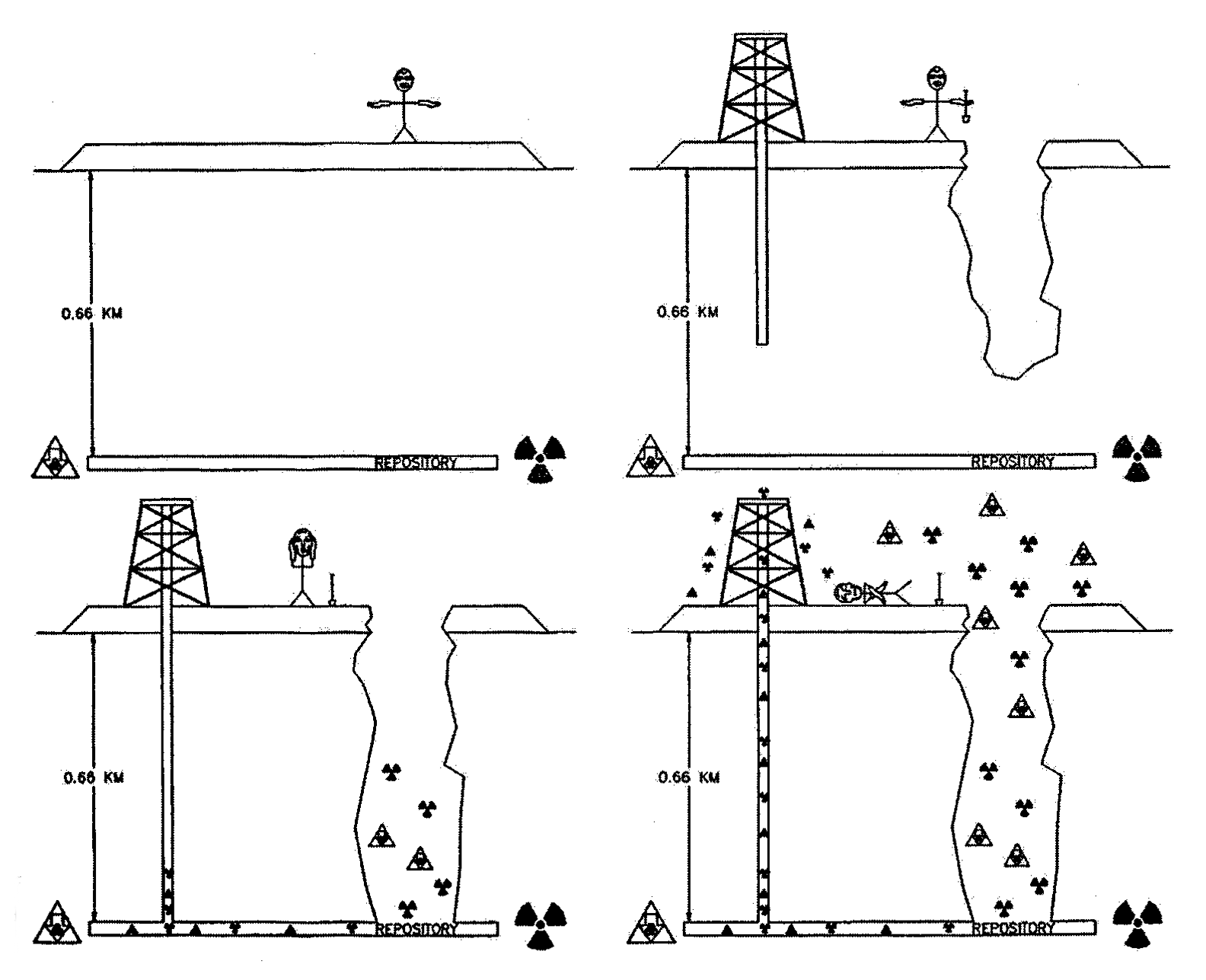

So what if we treat AI as what it is? An environmental hazard.

Safety procedures ☢️

Read this part if you failed to contain the AI and an agent chain reaction has destroyed your code base.

In case your code is radiating and has been polluted by AI-written code, it is time to run the contamination protocols and safety procedures.

First, isolate the AI-written code. Containerize the application and sandbox the execution environment. Add warnings and comments that can be understood years after. Treat your software the same way as you would treat legacy systems.

Once contained, bury the code in the deepest `/dev/null` you can find. Never touch it again, but also never forget about it. Future generations of coders might be able to untangle and decompose the mess that has been created.

Tags: llm, artifical, intelligence, bloat, abstraction, environment


3 min read

Disable dependabot alerts for all repos

It is well known that GitHub Dependabot alerts and PRs are less than helpful. For Hubbers, Dependabot is very similar to what Clippy was to Office users. It tries to help, but is very distracting when you are solving the actual problem.

Disabling Dependabot alerts for one repo is simple. Go to this page

https://github.com/$GITHUB_USERNAME/$REPO/settings/security_analysis

and click Disable. But doing this for 100 or 1000 repos is not feasible. We need a script to automate this process. Let me show you how.

In order to run the scripts you need to create a personal access token to access the GitHub API. Create a token with read/write access to user and repo here: https://github.com/settings/tokens

And then you are ready to configure and run the script. Simply change the `$GITHUB_USERNAME` and set the `$GITHUB_TOKEN` variables.

```bash
#!/bin/bash

GITHUB_USERNAME="janikvonrotz"
GITHUB_TOKEN="*******"

# Count public and private repos to know how many pages (max. 100 repos each) to fetch
GITHUB_TOTAL_REPOS=$(curl -s -H "Authorization: token $GITHUB_TOKEN" "https://api.github.com/user" | jq '.public_repos + .total_private_repos')
GITHUB_PAGINATION=100
GITHUB_NEEDED_PAGES=$(( (GITHUB_TOTAL_REPOS + GITHUB_PAGINATION - 1) / GITHUB_PAGINATION ))

echo "Found $GITHUB_TOTAL_REPOS repos, processing over $GITHUB_NEEDED_PAGES page(s)..."

for (( PAGE=1; PAGE<=GITHUB_NEEDED_PAGES; PAGE++ )); do
  REPOS=$(curl -s -H "Authorization: token $GITHUB_TOKEN" \
    "https://api.github.com/user/repos?per_page=$GITHUB_PAGINATION&page=$PAGE&type=owner")

  # Keep only repos owned by the user; --arg passes the username safely into jq
  echo "$REPOS" | jq -r --arg owner "$GITHUB_USERNAME" '.[] | select(.owner.login == $owner) | .full_name' | while read -r REPO_FULL; do
    echo "Disabling dependabot for: $REPO_FULL"

    # Disable dependabot vulnerability alerts
    curl -s -X DELETE \
      -H "Authorization: token $GITHUB_TOKEN" \
      -H "Accept: application/vnd.github.v3+json" \
      "https://api.github.com/repos/$REPO_FULL/vulnerability-alerts" \
      -w " -> Status: %{http_code}\n" -o /dev/null

    # Disable dependabot automated security fixes (PRs)
    curl -s -X DELETE \
      -H "Authorization: token $GITHUB_TOKEN" \
      -H "Accept: application/vnd.github.v3+json" \
      "https://api.github.com/repos/$REPO_FULL/automated-security-fixes" \
      -w " -> Status: %{http_code}\n" -o /dev/null
  done
done
```

The script creates a list of the repos owned by your account. Then it loops through the list and disables the alerts.
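The page count in the script above is an integer ceiling division: GitHub returns at most 100 items per page (`per_page` is capped at 100), so 250 repos need three requests. Isolated as a small, hypothetical helper, the arithmetic behind `GITHUB_NEEDED_PAGES` looks like this:

```bash
#!/bin/bash
# Ceiling division: number of pages of size per_page needed to cover total items.
# Adding per_page - 1 before the integer division rounds up instead of down.
pages_needed() {
  local total="$1" per_page="$2"
  echo $(( (total + per_page - 1) / per_page ))
}
```

For example, `pages_needed 250 100` prints `3`, while exactly 100 repos still fit on a single page.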

The script above only works for personal accounts. If you want to disable the alerts for all repos of an organisation, use this script:

```bash
#!/bin/bash

# Configuration
ORG_NAME="Mint-System"
GITHUB_TOKEN="*******"

# Count public and private repos of the organisation to know how many pages to fetch
GITHUB_TOTAL_REPOS=$(curl -s -H "Authorization: token $GITHUB_TOKEN" \
  "https://api.github.com/orgs/$ORG_NAME" | jq -r '.public_repos + .total_private_repos')
GITHUB_PAGINATION=100
GITHUB_NEEDED_PAGES=$(( (GITHUB_TOTAL_REPOS + GITHUB_PAGINATION - 1) / GITHUB_PAGINATION ))

echo "Found $GITHUB_TOTAL_REPOS repos in org '$ORG_NAME', processing over $GITHUB_NEEDED_PAGES page(s)..."

# Loop through each page of organization repositories
# (type=all includes private repos; type=public would skip them)
for (( PAGE=1; PAGE<=GITHUB_NEEDED_PAGES; PAGE++ )); do
  REPOS=$(curl -s -H "Authorization: token $GITHUB_TOKEN" \
    "https://api.github.com/orgs/$ORG_NAME/repos?per_page=$GITHUB_PAGINATION&page=$PAGE&type=all")

  echo "$REPOS" | jq -r '.[] | .full_name' | while read -r REPO_FULL; do
    echo "Disabling dependabot for: $REPO_FULL"

    # Disable dependabot vulnerability alerts
    curl -s -X DELETE \
      -H "Authorization: token $GITHUB_TOKEN" \
      -H "Accept: application/vnd.github.v3+json" \
      "https://api.github.com/repos/$REPO_FULL/vulnerability-alerts" \
      -w " -> Status: %{http_code}\n" -o /dev/null

    # Disable dependabot automated security fixes (PRs)
    curl -s -X DELETE \
      -H "Authorization: token $GITHUB_TOKEN" \
      -H "Accept: application/vnd.github.v3+json" \
      "https://api.github.com/repos/$REPO_FULL/automated-security-fixes" \
      -w " -> Status: %{http_code}\n" -o /dev/null
  done
done
```

You can use the same access token. Simply set the org name and you are good to go.

Categories: Software development

Tags: noice, cancelling, github, microsoft


8 min read

Migrate from GitHub to Codeberg

Since the enshittification of GitHub, I decided to become a Berger instead of a Hubber. This means that I wanted to move all my repos from github.com to codeberg.org.

Running a migration script is easy. But of course there are many details to consider once the repos have been moved. In this post I’ll brief you on my experience and give you details on these challenges:

- GitHub-Integration with Vercel

- Update git repo origin

- Replace GitHub links in repos

- Migrate GitHub Actions

- Redirect people to Codeberg

- Archive GitHub repos

- Mirror to GitHub

Running the migration

As mentioned, running a migration script that copies the repos from GitHub to Codeberg is easy.

The heavy lifting was done by https://github.com/LionyxML/migrate-github-to-codeberg. In order to run this script, you need to create a token with read/write access to user, organisation and repo for Codeberg. Create the token here: https://codeberg.org/user/settings/applications

Then do the same for GitHub. Create a token with read/write access to user and repo here: https://github.com/settings/tokens

Clone the migration script and update the variables. Run the script with `./migrate_github_to_codeberg.sh` and you should get output like this:

```text
>>> Migrating: Bundesverfassung (public)...
Success!
>>> Migrating: Hack4SocialGood (public)...
Success!
>>> Migrating: Quarto (public)...
Success!
>>> Migrating: WebPrototype (public)...
Success!
>>> Migrating: Website (public)...
Success!
>>> Migrating: raspi-and-friends (public)...
Success!
```

One issue was that the script migrated all repos from all connected organisations, so I had to delete all repos on Codeberg again. The following script helped with that:

```bash
#!/bin/bash
# Warning: deletes ALL repos of the user that are returned by the API

CODEBERG_USERNAME="janikvonrotz"
CODEBERG_TOKEN="*******"

# Fetch the repos of the user (the API returns only one page per request)
repos_response=$(curl -s -f -X GET \
  "https://codeberg.org/api/v1/users/$CODEBERG_USERNAME/repos" \
  -H "Authorization: token $CODEBERG_TOKEN")
curl_exit=$?

if [ "$curl_exit" -eq 0 ]; then
  repo_names=($(echo "$repos_response" | jq -r '.[].name'))
  for repo_name in "${repo_names[@]}"; do
    echo "Deleting repository $repo_name..."
    # Discard the body so that only the HTTP status code is captured
    delete_response=$(curl -s -o /dev/null -w "%{http_code}" -X DELETE \
      "https://codeberg.org/api/v1/repos/$CODEBERG_USERNAME/$repo_name" \
      -H "Authorization: token $CODEBERG_TOKEN")
    if [ "$delete_response" -eq 204 ]; then
      echo "Repository $repo_name deleted successfully."
    else
      echo "Failed to delete repository $repo_name. Status code: $delete_response"
    fi
  done
else
  # $? would already be overwritten here, so use the saved curl exit code
  echo "Failed to retrieve repository list. curl exit code: $curl_exit"
fi
```

I had to run the script multiple times because the API returns the repos in pages, so each run only deleted the repos of the first page.
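Instead of re-running the script, the listing can walk through the pages itself. This is a minimal sketch, assuming the Forgejo/Gitea API accepts the `page` and `limit` query parameters on `/users/{username}/repos` and that an empty page marks the end of the list. `fetch_repo_names_page`, `list_all_repo_names` and `PAGE_LIMIT` are hypothetical names; the username and token variables are the same as in the deletion script above.

```bash
#!/bin/bash
set -o pipefail

CODEBERG_USERNAME="janikvonrotz"
CODEBERG_TOKEN="*******"
# Page size; the server may cap it (assumption), the loop below works either way
PAGE_LIMIT=50

# Print the repo names of one page, one per line (no output = no more repos)
fetch_repo_names_page() {
  local page="$1"
  curl -s -f \
    -H "Authorization: token $CODEBERG_TOKEN" \
    "https://codeberg.org/api/v1/users/$CODEBERG_USERNAME/repos?page=$page&limit=$PAGE_LIMIT" \
    | jq -r '.[].name'
}

# Request page after page until an empty page is returned
list_all_repo_names() {
  local page=1
  local names
  while :; do
    names=$(fetch_repo_names_page "$page") || return 1
    [ -z "$names" ] && break
    printf '%s\n' "$names"
    page=$((page + 1))
  done
}
```

Deleting repos shifts the remaining ones to earlier pages, so it is safer to collect all names first, e.g. `repo_names=($(list_all_repo_names))`, and delete them afterwards.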

To migrate only the repos that belong to my account, I had to set the `OWNERS` variable in the script:

```bash
OWNERS=(
  "janikvonrotz"
)
```

Running the script once more copied all repos from GitHub to Codeberg:

```bash
./migrate_github_to_codeberg.sh
```

GitHub-Integration with Vercel

I use Vercel to build and publish my static websites. If you use Netlify, you probably face the same problem. Vercel is tightly integrated with GitHub, and at the time of writing this post there was no integration for Codeberg available. So it was either stick with GitHub or get rid of the integration.

I decided to get rid of it and uninstall the Vercel app on GitHub. You can access your GitHub apps here: https://github.com/settings/installations

This disconnects the Vercel projects from their GitHub repos, so they are no longer deployed automatically.

To deploy the websites, you can use the Vercel CLI. It is a piece of cake once you are logged in. Here is an example of such a deployment:

```
[main][~/taskfile.build]$ vercel --prod
Vercel CLI 44.7.3
🔍 Inspect: https://vercel.com/janik-vonrotz/taskfile-build/4zoKQnE7osV9udRnUyBYdX9EzNif [3s]
✅ Production: https://taskfile-build-5vtumwoja-janik-vonrotz.vercel.app [3s]
2025-08-20T11:20:17.987Z Running build in Washington, D.C., USA (East) – iad1
2025-08-20T11:20:17.988Z Build machine configuration: 2 cores, 8 GB
2025-08-20T11:20:18.006Z Retrieving list of deployment files...
2025-08-20T11:20:18.518Z Downloading 54 deployment files...
2025-08-20T11:20:19.233Z Restored build cache from previous deployment (BuULWL9zESMSfPa8QYN5gyoGA4JP)
2025-08-20T11:20:21.264Z Running "vercel build"
2025-08-20T11:20:21.727Z Vercel CLI 46.0.2
2025-08-20T11:20:22.364Z Detected `pnpm-lock.yaml` 9 which may be generated by [email protected] or [email protected]
2025-08-20T11:20:22.365Z Using [email protected] based on project creation date
2025-08-20T11:20:22.365Z To use [email protected], manually opt in using corepack (https://vercel.com/docs/deployments/configure-a-build#corepack)
2025-08-20T11:20:22.380Z Installing dependencies...
2025-08-20T11:20:23.109Z Lockfile is up to date, resolution step is skipped
2025-08-20T11:20:23.148Z Already up to date
2025-08-20T11:20:23.992Z
2025-08-20T11:20:24.001Z Done in 1.4s using pnpm v9.15.9
2025-08-20T11:20:26.202Z [11ty] Writing ./_site/index.html from ./README.md (liquid)
2025-08-20T11:20:26.208Z [11ty] Benchmark 73ms 19% 1× (Configuration) "@11ty/eleventy/html-transformer" Transform
2025-08-20T11:20:26.208Z [11ty] Copied 4 Wrote 1 file in 0.38 seconds (v3.0.0)
2025-08-20T11:20:26.303Z Build Completed in /vercel/output [4s]
2025-08-20T11:20:26.399Z Deploying outputs...
```

Of course, it is also possible to set up a CI job that installs the Vercel CLI and runs the production deployment.
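Such a job could run a step like the following. It is a minimal sketch, assuming the runner has Node.js and npm, and that `VERCEL_TOKEN`, `VERCEL_ORG_ID` and `VERCEL_PROJECT_ID` are provided as CI secrets (environment variables). The `pull`/`build`/`deploy --prebuilt` sequence follows Vercel's documented approach for deploying from other CI systems; `deploy_to_vercel` is a hypothetical helper name.

```bash
#!/bin/bash
set -euo pipefail

# Hypothetical CI step: deploy the current checkout to Vercel production.
# Assumes VERCEL_TOKEN, VERCEL_ORG_ID and VERCEL_PROJECT_ID come from CI secrets.
deploy_to_vercel() {
  # Fail early if the token secret is missing
  if [ -z "${VERCEL_TOKEN:-}" ]; then
    echo "VERCEL_TOKEN is not set" >&2
    return 1
  fi
  # Install the CLI on the fresh runner
  npm install --global vercel
  # Download the production project settings and environment variables
  vercel pull --yes --environment=production --token="$VERCEL_TOKEN"
  # Build on the runner, then upload the prebuilt output as production deployment
  vercel build --prod --token="$VERCEL_TOKEN"
  vercel deploy --prebuilt --prod --token="$VERCEL_TOKEN"
}
```

In a Forgejo or GitHub Actions workflow, this would be a single `run:` step that sources the file and calls `deploy_to_vercel`.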

Update git repo origin

For all local git repos, the remote has to be updated as well: the remote URL still points to github.com and needs to be replaced with the codeberg.org URL. The following script finds git repos in the top-level folders of the home directory and updates the matching URL:

```bash
#!/bin/bash

OLD_URL="git@github.com:janikvonrotz/"
NEW_URL="git@codeberg.org:janikvonrotz/"

# Find git repos one level below $HOME (e.g. ~/project/.git), safe for spaces in paths
find "$HOME" -maxdepth 2 -type d -name '.git' -print0 | while IFS= read -r -d '' REPO; do
  DIR=$(dirname "$REPO")
  CURRENT_URL=$(git -C "$DIR" config --get remote.origin.url)
  # Swap the GitHub prefix for the Codeberg prefix
  NEW_CURRENT_URL=$(echo "$CURRENT_URL" | sed "s|$OLD_URL|$NEW_URL|")
  if [ "$NEW_CURRENT_URL" != "$CURRENT_URL" ]; then
    git -C "$DIR" remote set-url origin "$NEW_CURRENT_URL"
    echo "Updated origin URL for $(basename "$DIR") to: $NEW_CURRENT_URL"
  fi
done
```

Submodule links in the `.gitmodules` file have to be updated manually.
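This step can be semi-automated with the same kind of substitution. A minimal sketch, assuming GNU `sed` (for `sed -i`) and submodule URLs in SSH (`git@github.com:janikvonrotz/REPO`) or HTTPS form; `rewrite_gitmodules` is a hypothetical helper name. Afterwards, `git submodule sync` copies the new URLs into the repo's `.git/config`.

```bash
#!/bin/bash

# Rewrite GitHub submodule URLs of the given .gitmodules file to Codeberg
rewrite_gitmodules() {
  local file="${1:-.gitmodules}"
  # Nothing to do in repos without submodules
  [ -f "$file" ] || return 0
  # Only links to the own account are touched, other GitHub URLs stay as they are
  sed -i \
    -e 's|git@github\.com:janikvonrotz/|git@codeberg.org:janikvonrotz/|g' \
    -e 's|https://github\.com/janikvonrotz/|https://codeberg.org/janikvonrotz/|g' \
    "$file"
}
```

Usage inside a repo: `rewrite_gitmodules && git submodule sync --recursive`.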

Replace GitHub links in repos

Not only the git remote links to github.com, but also the content stored in the repo. For example, I often add a `git clone` command to the usage section of the `README.md`, and its clone URL has to be updated.

I solved this with a semi-automated approach: several search-and-replace commands that look for github.com link patterns. The patterns only match links to my own account, so external links to github.com are preserved.

On the command line, I entered the repo and ran the replacement commands:

```bash
# sed -E with "~" as delimiter: with "|" as delimiter, an escaped "\|" is read
# as a literal "|" instead of an alternation, so the patterns would never match.
# xargs -r (GNU) skips sed when rg finds no files.

# 1. Fix github.com/blob → codeberg.org/src/branch
rg 'github\.com(:|/)(janikvonrotz)/[^/]+/blob/(main|master)' -l | \
  xargs -r sed -i -E 's~github\.com(:|/)(janikvonrotz)/([^/]+)/blob/(main|master)(/[^"]*)?~codeberg.org/\2/\3/src/branch/\4\5~g'

# 2. Fix github.com/tree → codeberg.org/src/branch
rg 'github\.com(:|/)(janikvonrotz)/[^/]+/tree/(main|master)' -l | \
  xargs -r sed -i -E 's~github\.com(:|/)(janikvonrotz)/([^/]+)/tree/(main|master)(/[^"]*)?~codeberg.org/\2/\3/src/branch/\4\5~g'

# 3. Fix raw.githubusercontent.com → codeberg.org/raw/branch
rg 'raw\.githubusercontent\.com/(janikvonrotz)/[^/]+/(main|master)' -l | \
  xargs -r sed -i -E 's~raw\.githubusercontent\.com/(janikvonrotz)/([^/]+)/(main|master)(/[^"]*)?~codeberg.org/\1/\2/raw/branch/\3\4~g'

# 4. Fix bare repo URLs: github.com/user/repo → codeberg.org/user/repo
#    (keeps ":" or "/" after the host so SSH clone URLs stay valid)
rg 'github\.com(:|/)janikvonrotz/[^/"?#]+' -l | \
  xargs -r sed -i -E 's~github\.com(:|/)(janikvonrotz)/([^/"?#]+)~codeberg.org\1\2/\3~g'

# 5. Fix user profile URLs
rg 'https://github\.com/janikvonrotz\b' -l | \
  xargs -r sed -i 's|https://github\.com/janikvonrotz|https://codeberg.org/janikvonrotz|g'

rg 'https://github\.com/jankvonrotz\b' -l | \
  xargs -r sed -i 's|https://github\.com/jankvonrotz|https://codeberg.org/jankvonrotz|g'
```
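Before running such commands on a repo, it helps to try the patterns on a few sample links. A small sketch that bundles the substitutions for the `janikvonrotz` account into a hypothetical `to_codeberg_url` filter reading from stdin. It applies the rules in the same order as the commands above, uses `sed -E` with `~` as delimiter so that `(main|master)` is a real alternation, and keeps the `:` or `/` after the host so SSH-style clone URLs stay valid.

```bash
#!/bin/bash

# Apply the GitHub → Codeberg link rules to stdin, in the same order as the rg/sed commands
to_codeberg_url() {
  sed -E \
    -e 's~github\.com(:|/)(janikvonrotz)/([^/]+)/blob/(main|master)(/[^"]*)?~codeberg.org/\2/\3/src/branch/\4\5~g' \
    -e 's~github\.com(:|/)(janikvonrotz)/([^/]+)/tree/(main|master)(/[^"]*)?~codeberg.org/\2/\3/src/branch/\4\5~g' \
    -e 's~raw\.githubusercontent\.com/(janikvonrotz)/([^/]+)/(main|master)(/[^"]*)?~codeberg.org/\1/\2/raw/branch/\3\4~g' \
    -e 's~github\.com(:|/)(janikvonrotz)/([^/"?#]+)~codeberg.org\1\2/\3~g' \
    -e 's~https://github\.com/janikvonrotz~https://codeberg.org/janikvonrotz~g'
}
```

For example, `echo 'git clone git@github.com:janikvonrotz/foo.git' | to_codeberg_url` should print the same command with `git@codeberg.org:janikvonrotz/foo.git`, while links to other GitHub accounts pass through unchanged.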

In some cases, simply replacing a link was not enough. For example, VuePress linked to GitHub by default, and I had to change `.vuepress/config.js` manually, with `$REPO` standing for the repo name:

```js
// .vuepress/config.js (excerpt)
repo: 'https://codeberg.org/janikvonrotz/$REPO',
repoLabel: 'Codeberg',
docsBranch: 'main',
```

Nonetheless, replacing the links was easier than expected.

Migrate GitHub Actions

I didn't run a lot of GitHub Actions for my personal repos. One of the few was this action:

https://github.com/janikvonrotz/janikvonrotz.ch/blob/main/.github/workflows/build.yml

It builds a Docker image and pushes it to a Docker registry.

Codeberg offers two ways to run jobs.

There is the Woodpecker CI:

https://docs.codeberg.org/ci/#using-codeberg's-instance-of-woodpecker-ci

And there are Forgejo Actions:

https://docs.codeberg.org/ci/actions/#installing-forgejo-runner

I decided to use Forgejo Actions. First, I enabled Forgejo Actions in the repo settings. Next, I created the `DOCKER_PAT` secret in the user settings: https://codeberg.org/user/settings/actions/secrets

Forgejo Actions use largely the same YAML syntax as GitHub Actions, so I only needed to rename the `.github` folder to `.forgejo`. I pushed the changes and the first run was created:

https://codeberg.org/janikvonrotz/janikvonrotz.ch/actions/runs/1
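The rename itself can be done with `git mv`, which stages it in one step. A tiny sketch as a hypothetical `move_workflows_to_forgejo` helper, assuming it runs from the repo root; note that it moves everything in `.github`, not only the workflows.

```bash
#!/bin/bash

# Rename .github to .forgejo so Forgejo picks up the workflows (run in the repo root)
move_workflows_to_forgejo() {
  if [ ! -d .github ]; then
    echo "No .github folder found" >&2
    return 1
  fi
  if [ -e .forgejo ]; then
    echo ".forgejo already exists, not overwriting" >&2
    return 1
  fi
  # Renames the folder on disk and stages the rename
  git mv .github .forgejo
}
```

Usage: `move_workflows_to_forgejo && git commit -m "Move workflows to .forgejo" && git push`.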

However, the run was waiting for the default Forgejo runner, which did not seem to be meant for public use. So I decided to provide my own Forgejo runner.

I created a Helm chart to deploy a Forgejo runner:

https://kubernetes.build/forgejoRunner/README.html

Further, I updated `.forgejo/workflows/build.yml` to use my own runner. The setup worked, but it turned out that most of the CI dependencies are not defined in the YAML but installed on the runner. As far as I understand, GitHub-hosted runners are full virtual machines on Azure with a lot of preinstalled software. Replicating these environments is not really feasible. Also, building a multi-platform Docker image with Docker in Docker inside a Kubernetes cluster is not the best idea.

I decided to put this issue on hold. As an alternative, I set up a mirror from the Codeberg repo to GitHub (see section below).

Redirect people to Codeberg

It is not possible to redirect repo visitors automatically from GitHub to Codeberg. Instead, I updated the repo description with a link to the new location. The following script walks through my GitHub repos and updates the description:

```bash
#!/bin/bash

CODEBERG_URL="https://codeberg.org/janikvonrotz/"
GITHUB_USERNAME="janikvonrotz"
GITHUB_TOKEN="*******"
GITHUB_PAGINATION=100

page=1
while :; do
  # affiliation=owner lists only repos owned by the user, not org or collaborator repos
  repos=$(curl -s -H "Authorization: token $GITHUB_TOKEN" \
    "https://api.github.com/user/repos?affiliation=owner&per_page=${GITHUB_PAGINATION}&page=${page}")
  # Stop at the first empty page (or if the API returned an error object)
  [ "$(echo "$repos" | jq 'if type == "array" then length else 0 end')" -eq 0 ] && break

  for repo in $(echo "$repos" | jq -r '.[] | select(.owner.login == "'"$GITHUB_USERNAME"'") | .name'); do
    echo "Update repo description for $GITHUB_USERNAME/$repo:"
    new_description="This repository has been moved to $CODEBERG_URL$repo. Please visit the new location for the latest updates."
    curl -s -o /dev/null -w " -> Status: %{http_code}\n" -X PATCH \
      -H "Authorization: token $GITHUB_TOKEN" \
      -H "Content-Type: application/json" \
      "https://api.github.com/repos/$GITHUB_USERNAME/$repo" \
      -d "{\"description\":\"$new_description\"}"
  done
  page=$((page + 1))
done
```

Archive GitHub repos

Archiving a repo on GitHub signals that it is no longer maintained there, and the archived repo becomes read-only. With the following script, I archived all my GitHub repos:

```bash
#!/bin/bash

ARCHIVE_MESSAGE="Repository migrated to Codeberg."
GITHUB_USERNAME="janikvonrotz"
GITHUB_TOKEN="*******"
GITHUB_PAGINATION=100

page=1
while :; do
  # affiliation=owner lists only repos owned by the user, not org or collaborator repos
  repos=$(curl -s -H "Authorization: token $GITHUB_TOKEN" \
    "https://api.github.com/user/repos?affiliation=owner&per_page=${GITHUB_PAGINATION}&page=${page}")
  # Stop at the first empty page (or if the API returned an error object)
  [ "$(echo "$repos" | jq 'if type == "array" then length else 0 end')" -eq 0 ] && break

  for repo in $(echo "$repos" | jq -r '.[] | select(.owner.login == "'"$GITHUB_USERNAME"'") | .name'); do
    echo "Archive repo $GITHUB_USERNAME/$repo:"
    # As far as I know, archive_message is not a documented field of the GitHub
    # "update a repository" endpoint and is probably ignored; the moved notice
    # lives in the repo description.
    curl -s -o /dev/null -w " -> Status: %{http_code}\n" -X PATCH \
      -H "Authorization: token $GITHUB_TOKEN" \
      -H "Content-Type: application/json" \
      "https://api.github.com/repos/$GITHUB_USERNAME/$repo" \
      -d "{\"archived\":true,\"archive_message\":\"$ARCHIVE_MESSAGE\"}"
  done
  page=$((page + 1))
done
```

Mirror to GitHub

Mirroring a repo to GitHub solved the problem I had with running the GitHub Actions in the Codeberg environment. Codeberg can push a repo to GitHub as a mirror, so every pushed commit also triggers the GitHub Actions there.

In the mirror settings of your repo (in my case https://codeberg.org/janikvonrotz/janikvonrotz.ch/settings), you can set up a push URL. Enter the same credentials as used in the migration script and make sure to tick the push-on-commit box.
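For many repos, the push mirror could also be created through the API instead of the settings page. This was not part of the migration above and is a sketch under assumptions: it assumes the Forgejo/Gitea endpoint `POST /api/v1/repos/{owner}/{repo}/push_mirrors` with the fields `remote_address`, `remote_username`, `remote_password`, `interval` and `sync_on_commit`, so check them against Codeberg's Swagger API documentation first. `push_mirror_payload` and `create_push_mirror` are hypothetical helpers, and the GitHub token serves as the remote password.

```bash
#!/bin/bash

CODEBERG_USERNAME="janikvonrotz"
CODEBERG_TOKEN="*******"
GITHUB_USERNAME="janikvonrotz"
GITHUB_TOKEN="*******"

# Build the JSON body for one push mirror (field names are an assumption, see above)
push_mirror_payload() {
  local repo="$1"
  printf '{"remote_address":"https://github.com/%s/%s.git","remote_username":"%s","remote_password":"%s","interval":"8h0m0s","sync_on_commit":true}' \
    "$GITHUB_USERNAME" "$repo" "$GITHUB_USERNAME" "$GITHUB_TOKEN"
}

# Create the push mirror for one Codeberg repo and print the HTTP status code
create_push_mirror() {
  local repo="$1"
  curl -s -o /dev/null -w "%{http_code}\n" -X POST \
    -H "Authorization: token $CODEBERG_TOKEN" \
    -H "Content-Type: application/json" \
    "https://codeberg.org/api/v1/repos/$CODEBERG_USERNAME/$repo/push_mirrors" \
    -d "$(push_mirror_payload "$repo")"
}
```

Usage: `create_push_mirror janikvonrotz.ch`; afterwards the mirror should show up in the repo's mirror settings.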

Summary and outlook

Not being able to run my own Forgejo runner was very frustrating. I think CI should not be that hard. I will try to set up a Forgejo runner on a plain VM instead of Kubernetes and build my website image with it.

Overall, moving my personal repos from GitHub to Codeberg was easy. I have not yet considered moving the repos of my organisation. I think this will be a much more difficult challenge, as the organisation repos are deeply integrated into many other projects. The best approach I can think of is mirroring the repos from GitHub to Codeberg, starting the transition with one repo and then moving along the linked repos.

Categories: Software development

Tags: github, codeberg, migration


3 min read

Store Passkeys in KeePassXC

The goal of Passkeys is to replace passwords.

The idea is that instead of remembering a password and entering it to access your account, you own a device that holds a secret key and proves your identity for you.

Remembering is replaced with Owning.

In this post, I’ll give an example of such a device and how you can create and store a Passkey securely.

YubiKey

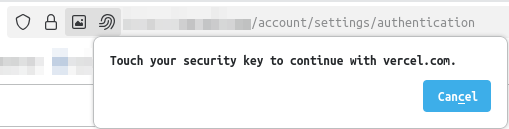

A device that can be used as a Passkey is the YubiKey. Setting it up is actually quite simple. In this example, we have an online account and are going to set up the Passkey. In the security settings of the online account, I click Add Passkey, and the browser prompts for an input:

The YubiKey is plugged in, I touch the YubiKey, and the device is registered. That’s it. From now on, when I log into my account, as a login option, I can choose Passkey. The browser prompts for the input, I touch the YubiKey, and get logged in.

However, at this point, you might ask: What happens when I lose the YubiKey? Can I make a backup of the key?

The short answer is: You cannot create a backup of a YubiKey.

So, the YubiKey might not be the best solution to use as a Passkey. Luckily, there are other providers and devices to manage a Passkey.

KeePassXC

Here, I will show you how you can create and store a Passkey with KeePassXC.

- Install KeePassXC

- Set up a password database

- In the settings, enable the browser integration and select your browser

- Install the KeePassXC browser extension

- Connect the extension to your KeePassXC database

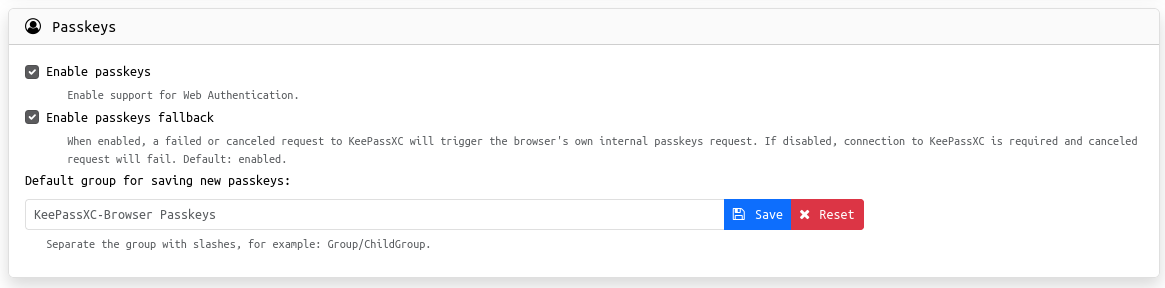

- Enable the Passkeys option in the KeePassXC browser extension settings

- Optionally, set the Default group for saving new passkeys field

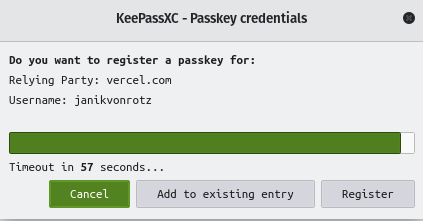

You may need to restart your browser for the changes to take effect. When you register a new Passkey for your account, the KeePassXC extension will open the KeePassXC database locally and show this dialog:

You can click Register, and then you'll find a Passkey entry in your KeePassXC database.

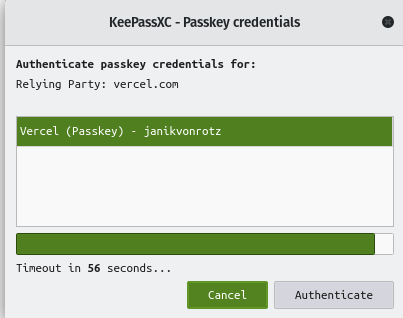

Logging into your account with a Passkey is simple. Select the Passkey option, and the KeePassXC extension will find a matching entry and prompt to authenticate.

Click Authenticate, and you should be logged in.

The KeePassXC database can be backed up, and while Passkey entries can technically be exported, it’s crucial to understand the security implications before doing so.

Recommendations

When registering a Passkey for your account, I recommend registering both KeePassXC and the YubiKey. The main reason is logging in with the mobile browser of your smartphone: setting up the KeePassXC Passkey solution on your smartphone is currently not possible (as far as I know).

The YubiKey can be plugged into the smartphone’s USB-C port and used to authenticate with any mobile browser. Here is a simple webpage that shows Passkey device and browser compatibility: https://www.passkeys.io/compatible-devices

Categories: Security

Tags: keepass, passkeys, password, manage
